Greece’s decision to deploy machine learning in pandemic surveillance will be much-studied around the world. Credit: Konstantinos Tsakalidis/Bloomberg/Getty

A few months into the COVID-19 pandemic, operations researcher Kimon Drakopoulos e-mailed both the Greek prime minister and the head of the country’s COVID-19 scientific task force to ask if they needed any extra advice.

Drakopoulos works in data science at the University of Southern California in Los Angeles, and is originally from Greece. To his surprise, he received a reply from Prime Minister Kyriakos Mitsotakis within hours. The European Union was asking member states, many of which had implemented widespread lockdowns in March, to allow non-essential travel to recommence from July 2020, and the Greek government needed help in deciding when and how to reopen borders.

Greece, like many other countries, lacked the capacity to test all travellers, particularly those not displaying symptoms. One option was to test a sample of visitors, but Greece opted to trial an approach rooted in artificial intelligence (AI).

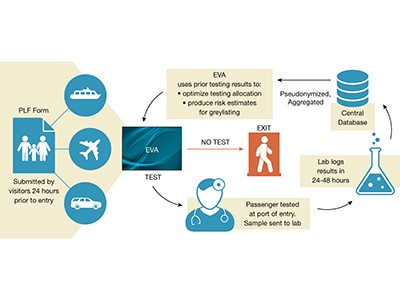

Between August and November 2020, with input from Drakopoulos and his colleagues, the authorities operated a system that uses a machine-learning algorithm to determine which travellers entering the country should be tested for COVID-19. The researchers found machine learning to be more effective at identifying asymptomatic infected people than random testing or testing based on a traveller’s country of origin. According to their analysis, during the peak tourist season, the system detected two to four times more infected travellers than did random testing.

Read the paper: Efficient and targeted COVID-19 border testing via reinforcement learning

The machine-learning system, which is among the first of its kind, is called Eva and is described in Nature this week (H. Bastani et al. Nature https://doi.org/10.1038/s41586-021-04014-z; 2021). It’s an example of how data analysis can contribute to effective COVID-19 policies. But it also presents challenges, from protecting individuals’ privacy to independently verifying the system’s accuracy. Moreover, Eva is a reminder of why proposals for a pandemic treaty (see Nature 594, 8; 2021) must consider rules and protocols on the proper use of AI and big data. These need to be drawn up in advance so that such analyses can be used quickly and safely in an emergency.

In many countries, travellers are chosen for COVID-19 testing at random or according to risk categories. For example, a person coming from a region with a high rate of infections might be prioritized for testing over someone travelling from a region with a lower rate.

By contrast, Eva collected not only travel history, but also demographic data such as age and sex from the passenger information forms required for entry to Greece. It then matched those characteristics with data from previously tested passengers and used the results to estimate an individual’s risk of infection. COVID-19 tests were targeted to travellers calculated to be at highest risk. The algorithm also issued tests to allow it to fill data gaps, ensuring that it remained up to date as the situation unfolded.
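The logic described above, estimating risk from earlier test results, testing the highest-risk travellers and reserving some tests for groups with little data, can be illustrated with a small sketch. This is not Eva's actual algorithm or code: the class `TestAllocator`, the group labels, the assumed 10% prior and the `explore` weight are all hypothetical, and a simple optimism bonus stands in for whatever exploration rule the published system uses.

```python
import math
from collections import defaultdict


class TestAllocator:
    """Toy risk-based allocation of a limited test budget (illustrative only)."""

    def __init__(self, prior_pos=1.0, prior_neg=9.0, explore=0.5):
        # Pseudo-counts: an assumed 10% prevalence for groups with no data yet.
        self.prior_pos = prior_pos
        self.prior_neg = prior_neg
        self.explore = explore          # weight of the bonus for sparse groups
        self.pos = defaultdict(int)     # positive results seen per group
        self.neg = defaultdict(int)     # negative results seen per group

    def record(self, group, positive):
        """Store the result of one completed test for a traveller group."""
        if positive:
            self.pos[group] += 1
        else:
            self.neg[group] += 1

    def risk(self, group):
        """Smoothed share of positive tests among past travellers in the group."""
        a = self.prior_pos + self.pos[group]
        b = self.prior_neg + self.neg[group]
        return a / (a + b)

    def score(self, group):
        """Estimated risk plus a bonus that shrinks as the group is tested more."""
        n = self.pos[group] + self.neg[group]
        return self.risk(group) + self.explore / math.sqrt(n + 1)

    def choose(self, arrivals, budget):
        """arrivals: list of (traveller_id, group); returns the ids to test."""
        ranked = sorted(arrivals, key=lambda t: self.score(t[1]), reverse=True)
        return [traveller_id for traveller_id, _ in ranked[:budget]]


# Hypothetical groups: (origin, age band, sex), as might come from an entry form.
high = ("origin-X", "20-39", "F")
low = ("origin-Y", "40-59", "M")
unseen = ("origin-Z", "20-39", "M")

allocator = TestAllocator()
for i in range(100):
    allocator.record(high, positive=(i < 20))   # 20 of 100 past tests positive
    allocator.record(low, positive=(i < 2))     # 2 of 100 past tests positive

arrivals = [(1, low), (2, high), (3, unseen)]
selected = allocator.choose(arrivals, budget=2)
print(selected)  # -> [3, 2]
```

With two tests available, the sketch first picks the traveller from the group that has never been tested, then the one from the group with the highest observed positivity. Setting `explore` to zero would rank travellers by estimated risk alone, at the cost of never learning about groups that have no data.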

During the pandemic, there has been no shortage of ideas on how to deploy big data and AI to improve public health or assess the pandemic’s economic impact. However, relatively few of these ideas have made it into practice. This is partly because companies and governments that hold relevant data — such as mobile-phone records or details of financial transactions — need agreed systems to be in place before they can share the data with researchers. It’s also not clear how consent can be obtained to use such personal data, or how to ensure that these data are stored safely and securely.

A machine-learning algorithm to target COVID testing of travellers

Eva was developed in consultation with lawyers, who ensured that the program abided by the privacy protections afforded by the EU’s General Data Protection Regulation (GDPR). Under the GDPR, organizations, such as airlines, that collect personal data need to follow security standards and obtain consent to store and use the data — and to share them with a public authority. The information collected tends to be restricted to the minimum amount required for the stated purpose.

But this is not necessarily the case outside the EU. Moreover, AI techniques such as machine learning are limited by the quality of the available data. Researchers have revealed many instances in which algorithms that were intended to improve decision-making in areas such as medicine and criminal justice reflect and perpetuate biases that are common in society. The field needs to develop standards to indicate when data, and the algorithms that learn from them, are of sufficient quality to be used to make important decisions in an emergency. There must also be a focus on transparency about how algorithms are designed and what data are used to train them.

The hunger with which Drakopoulos’s offer of help was accepted shows how eager policymakers are to improve their ability to respond in an emergency. As such algorithms become increasingly prominent and more widely accepted, it could be easy for them to slide, unnoticed, into day-to-day life, or be put to nefarious use. One example is that of facial-recognition technologies, which can be used to reduce criminal behaviour, but can also be abused to invade people’s privacy (see Nature 587, 354–358; 2020). Although Eva’s creators succeeded in doing what they set out to do, it’s important to remember the limitations of big data and machine learning, and to develop ways to govern such techniques so that they can be quickly — and safely — deployed.

Despite a wealth of methods for collecting data, many policymakers have been unable to access and harness data during the pandemic. Researchers and funders should start laying the groundwork now for emergencies of the future, developing data-sharing agreements and privacy-protection protocols in advance to improve reaction times. And discussions should also begin about setting sensible limits on how much decision-making power an algorithm should be given in a crisis.

Everyone should decide how their digital data are used — not just tech companies

The powers and perils of using digital data to understand human behaviour

Can tracking people through phone-call data improve lives?

Am I arguing with a machine? AI debaters highlight need for transparency
